Does more precise information lead to better decisions? Does it matter whether that information is verifiable?

Based on research by Aditya Kuvalekar, Joao Ramos, and Johannes Schneider.

When we make decisions, we often base them on incomplete information. Usually, only part of that information is verifiable. For example, take a doctor who decides whether a C-section is necessary. She bases her decision on an ultrasound, an objective and verifiable measure. Yet she also uses harder-to-verify subjective judgment that builds on her expertise and experience. Similarly, when a country is accused of committing crimes, governments worldwide decide whether they should retaliate. To decide, they use information provided by public institutions (verifiable) but also top-secret information from intelligence services (unverifiable). When CEOs make decisions on mergers, they consider verifiable accounting data. However, they also use their intuition as managers. The list goes on.

In a world in which the quality of information changes, we may wonder how those changes affect decision-making. Does it matter for outcomes whether it is the verifiable or the unverifiable information whose quality changes?

When holding decision-makers accountable ex-post, authorities only have access to verifiable information. They ask whether the agent caused the harm through reckless behaviour. We may naively think that better verifiable information makes it simpler to identify reckless behaviour, leading to better outcomes. After all, decision-makers understand the situation better and can show more reliable evidence of what they knew. In recent work (Kuvalekar, Ramos, and Schneider, 2022), we show that this conclusion is flawed.

What do we find?

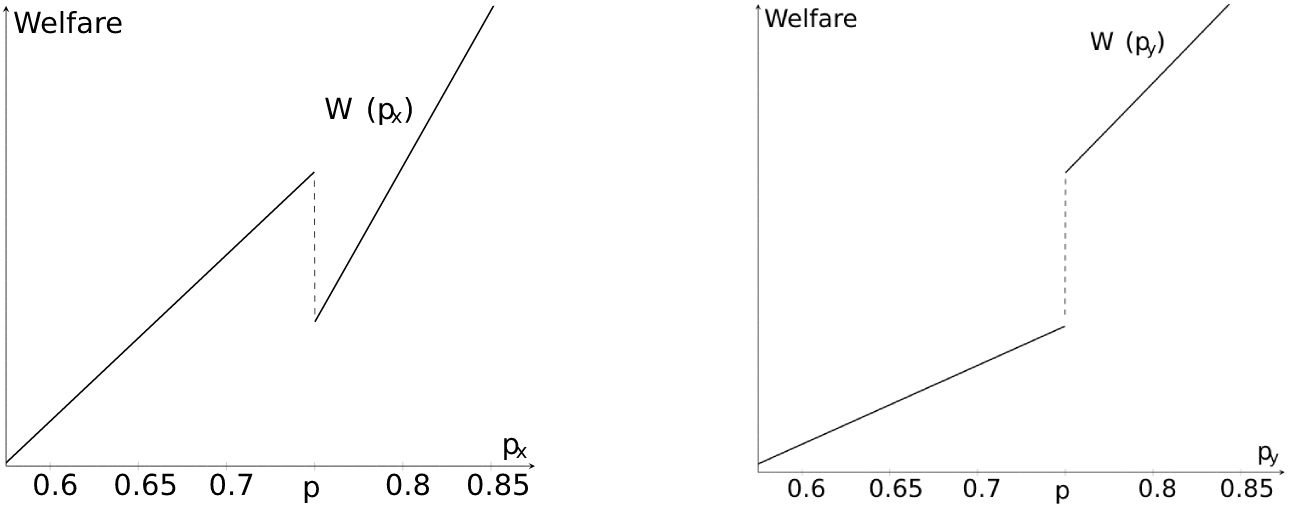

More precise unverifiable information increases welfare if institutions react optimally. More precise verifiable information, on the other hand, may lower welfare regardless of how institutions react.

The reason behind this result lies in the so-called chilling effect: Laws designed to deter reckless actions may have an unintended side-effect. They also prevent reasonable efforts because agents fear being mistaken as reckless ex-post. Improving verifiable information implies a more substantial chilling effect. A strong chilling effect can overthrow the positive impact of better information. Improving unverifiable information means a weaker chilling effect complementing the positive impact of better information. Thus, we should not worry if the unverifiable information of agents improves. Instead, we should be cautious if the verifiable information improves.

The effects of more precision on welfare. The left panel shows welfare as a function of the precision of the verifiable information. As precision goes up, welfare may drop. The right panel shows that welfare is always increasing when the unverifiable information gets more precise.

Laws, Decisions, and Courts

The following abstract setting helps to clearly describe the incentives involved. An individual (the agent) holds information about a particular action. Only some of the information is verifiable. The agent decides whether to take that action. If the agent takes the action and the outcome turns out to be bad, an authority (the court) investigates the agent’s decision. The court sees the agent’s verifiable information and decides whether to punish the agent. Finally, a higher institution (the designer) sets the limits of the punishment upfront.

There are two types of agents: reasonable and reckless. Reasonable agents want to take an action only if it is good. A good doctor wants a C-section only when necessary. A trustworthy government imposes measures only if the other country's leaders are responsible for the crimes. A reasonable manager approves the merger only if it is profitable for the firm in the long run. Reckless agents, instead, only have selfish motives in mind. A careless doctor performs the C-section regardless, as it is more profitable. An irresponsible government imposes sanctions to signal strength to its voters. A reckless manager completes a merger to obtain a bonus in the short run. After the C-section led to complications, the accused country turned out to be innocent, or the merger provided low synergies, the court (the voter, the board of directors) decides whether the agent's decision was an honest mistake or resulted from reckless behaviour. The court's objective is to punish the reckless and acquit the reasonable. Finally, anticipating the behaviour of agents and courts, the designer limits the punishment to improve aggregate decision-making.

Deterrence and the Chilling Effect

The courts in the model sketched above act only after the agent has taken her action. Rational courts apply the reasonable man standard: given the odds (and considering a potential punishment), would a reasonable agent have taken the action? If the answer is yes, the court acquits. If the answer is no, the court punishes. The agent anticipates the court's behaviour when making her decision and wonders: given my preferences (reckless or reasonable) and the risk of punishment, should I take the action? Finally, the designer asks: can I limit the court's punishment options to induce better decision-making by the agent? The results depend on the details of the situation. Reckless behaviour may or may not be deterred, and a chilling effect may or may not arise.

The Effect of Better Information

The next step is to ask what happens if the information improves. Here, we differentiate between the two kinds of information: verifiable and unverifiable.

Let us first assume that the quality of verifiable information improves. Better verifiable information means the verifiable signal is more trustworthy. A good-looking ultrasound is less ambiguous; a government report finding no irregularities is more comprehensive; accounting data that suggests no synergies covers more branches. As a result, these signals are harder to overturn via an unverifiable signal that recommends the action. The negative verifiable signal deters both the reasonable and the reckless agent from acting against it. However, the reasonable agent is subject to a second force. A negative verifiable signal also means taking the action is more likely to cause harm, an outcome that hurts the reasonable agent but not the reckless one. Thus, all else equal, the chilling effect that comes with deterring the reckless agent grows with the quality of the verifiable information. The stronger chilling effect can make the net impact of improved information negative: welfare declines.

Let us now assume that the quality of the unverifiable information improves. On the one hand, better unverifiable information makes it easier to overturn a verifiable signal. On the other hand, if both the verifiable signal and the unverifiable signal recommend against taking the action, punishment becomes more likely. The first effect weakens the chilling effect: the reasonable agent is more willing to trust her unverifiable information. The second effect strengthens deterrence: the reckless agent is less likely to act if all available information recommends against it. Both effects improve welfare and strengthen the positive impact of better information. Agents make better decisions in expectation, and welfare increases.
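To make the informational part of this argument concrete, here is a minimal sketch in Python. It is not the model from the paper: it assumes a binary state (the action is good or bad), two conditionally independent binary signals, and a "precision" equal to the probability that a signal matches the true state. The function name `posterior_good` and all numbers are hypothetical choices for illustration. The sketch only applies Bayes' rule to show how hard it is to overturn a negative verifiable signal with a positive unverifiable one. It says nothing about courts, punishment, or welfare.

```python
def posterior_good(prior, q_verifiable, q_unverifiable, v_says_act, u_says_act):
    """Posterior probability that the action is good, by Bayes' rule.

    Assumption (not from the paper): each signal is binary and matches
    the true state with probability equal to its precision, and the two
    signals are independent conditional on the state.
    """
    def likelihood(precision, says_act, state_good):
        # Probability of observing this recommendation in this state.
        return precision if says_act == state_good else 1.0 - precision

    good = (prior
            * likelihood(q_verifiable, v_says_act, True)
            * likelihood(q_unverifiable, u_says_act, True))
    bad = ((1.0 - prior)
           * likelihood(q_verifiable, v_says_act, False)
           * likelihood(q_unverifiable, u_says_act, False))
    return good / (good + bad)


if __name__ == "__main__":
    # Verifiable signal says "do not act", unverifiable signal says "act".
    for q_v in (0.6, 0.9):
        p = posterior_good(0.5, q_v, 0.8, v_says_act=False, u_says_act=True)
        print(f"verifiable precision {q_v}: P(good) = {p:.3f}")
    for q_u in (0.7, 0.9):
        p = posterior_good(0.5, 0.6, q_u, v_says_act=False, u_says_act=True)
        print(f"unverifiable precision {q_u}: P(good) = {p:.3f}")
```

Under these assumptions, raising the precision of the verifiable signal lowers the posterior that the action is good when the signals conflict, so the negative verifiable signal is harder to overturn. Raising the precision of the unverifiable signal has the opposite effect.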

Reporting Standards and Access to Information

Making verifiable information more precise is one of the main goals of evolving reporting standards. The paradigm of evidence-based medicine requires doctors to run various ex-post verifiable tests. Calls for transparency require governments to conduct publicly verifiable investigations before taking action. Due diligence requires management to write extensive reports before executing the merger. Our finding is a cautionary tale: improving the quality of verifiable information by any possible amount is not always optimal. Minor improvements in the quality of the verifiable information may backfire because they make reasonable actors overly cautious without affecting the reckless much. If, instead, unverifiable information improves, we need to worry less. Reckless actors cannot hide behind claims that unverifiable information justified their action, at least if the authorities that hold them accountable respond rationally.

In light of our argument, policymakers should carefully think about the reporting standards they require from decision-makers. Indeed, more precise verifiable information may not necessarily lead to better decisions.

As a final thought, I want to emphasize that our argument holds even though we make an assumption that some may consider quite heroic: reporting and documenting impose no cost on anyone in the economy. Taking those costs into account, of course, only strengthens our results and the implications for policymakers.

About the authors:

Aditya Kuvalekar is an Assistant Professor in Economics at the University of Essex. His research focuses on dynamic games, organisational economics, and market design.

https://sites.google.com/view/adityakuvalekar

Joao Ramos is an Assistant Professor of Finance and Business Economics at the University of Southern California Marshall. His research focuses on the analysis of the strategic decisions of agents to acquire, share, and use information.

Johannes Schneider is an Assistant Professor of Economics at Universidad Carlos III de Madrid (UC3M). He works on Economic Theory and its applications to Innovation, Technological Change, and the Law.

Further Reading:

Kuvalekar, Aditya, Joao Ramos, and Johannes Schneider (2022). “The Wrong Kind of Information”, forthcoming in the RAND Journal of Economics. Available at: arXiv:2111.04172